To create an Ubuntu VM for a Kubernetes cluster using Proxmox, follow these steps: download and tweak the base image, sysprep it, create a template with specified configurations, and clone the VM. Adjust settings such as memory, storage, and IP configurations. Fix shared IP issues by resetting the machine ID.

I needed to create a few Ubuntu VMs for a Kubernetes cluster for testing, and I wanted to make the process as simple as possible using Proxmox and some minimal automation. Here’s what I’ve done:

First, download the base image:

wget https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img
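Before modifying the image, it may be worth checking that the download is intact. The sketch below assumes you have also fetched the SHA256SUMS file that Ubuntu publishes next to the image; `verify_image` is a hypothetical helper name, not something from my original setup.

```shell
# Check every file listed in SHA256SUMS that is present in the current
# directory. --ignore-missing skips the images we did not download, and
# sha256sum fails if nothing at all could be verified.
verify_image() {
    sums_file="${1:-SHA256SUMS}"
    sha256sum --check --ignore-missing --quiet "$sums_file"
}

# Usage (needs network access):
#   wget https://cloud-images.ubuntu.com/jammy/current/SHA256SUMS
#   verify_image SHA256SUMS && echo "checksum OK"
```

A non-zero exit status means the image does not match the published checksum and should be downloaded again.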

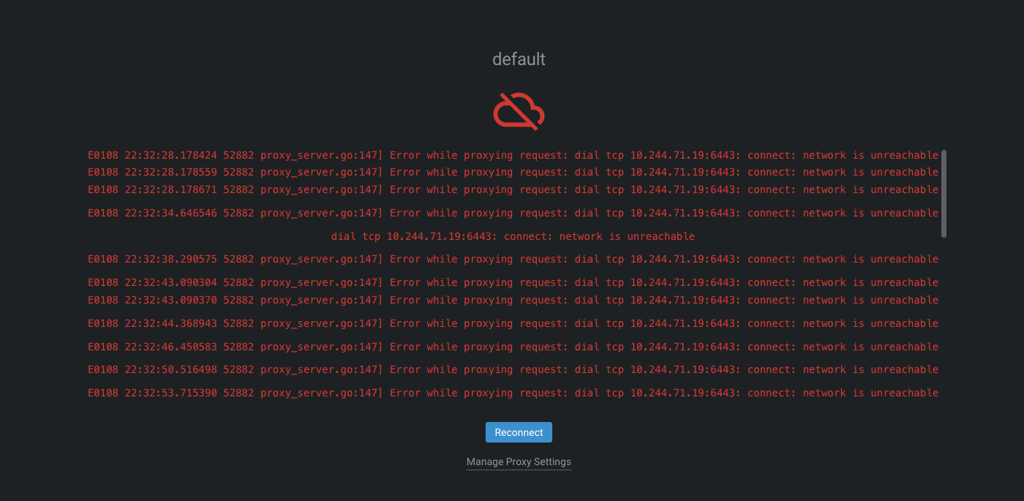

Then, tweak the image. Since I’m using my apt-cacher-ng proxy here, I’ve set the proxy for all VMs. If you don’t want a proxy, remove the --append-line option and its argument; otherwise, change the address to match your own. I’m also installing qemu-guest-agent at this point, and you can install any other packages you need the same way.

sudo virt-customize -a jammy-server-cloudimg-amd64.img --install qemu-guest-agent --append-line '/etc/apt/apt.conf.d/00proxy:Acquire::http { Proxy "http://10.244.71.182:3142"; };'
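If you switch between networks with and without a proxy, the same command can be wrapped so the proxy line is only added when one is configured. This is a rough sketch, not what I actually ran: `customize_image`, `APT_PROXY`, and `VIRT_CUSTOMIZE` are names I made up for illustration.

```shell
# Build the virt-customize arguments, adding the apt proxy only if
# APT_PROXY is set (hypothetical variable, e.g. http://10.244.71.182:3142).
customize_image() {
    image="$1"
    set -- -a "$image" --install qemu-guest-agent
    if [ -n "${APT_PROXY:-}" ]; then
        set -- "$@" --append-line \
            "/etc/apt/apt.conf.d/00proxy:Acquire::http { Proxy \"$APT_PROXY\"; };"
    fi
    # VIRT_CUSTOMIZE can be overridden (e.g. with "echo") to preview the call.
    ${VIRT_CUSTOMIZE:-sudo virt-customize} "$@"
}

# Usage:
#   APT_PROXY=http://10.244.71.182:3142 customize_image jammy-server-cloudimg-amd64.img
#   customize_image jammy-server-cloudimg-amd64.img    # no proxy
```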

Sysprepping the image resets it to a clean default state. If you skip this step and clone the machine multiple times, all the clones will have the same machine ID and IP address. [Note: This isn’t working fully for me. See below for the changes I made to the machine ID.]

sudo virt-sysprep -a jammy-server-cloudimg-amd64.img

Create the template. I used ID 9000 and the name “ubuntu-2204-cloudinit-template”; change both as you like. I’ve also tagged the network interface with VLAN 72 (my Kubernetes VLAN), so change or remove the tag as needed. After importing the disk, I grow it by 50GB. Replace every reference to “godboxv2-tank” with your own storage name.

sudo qm create 9000 --name "ubuntu-2204-cloudinit-template" --memory 4096 --cores 2 --net0 virtio,bridge=vmbr0,tag=72

sudo qm importdisk 9000 jammy-server-cloudimg-amd64.img godboxv2-tank

sudo qm set 9000 --scsihw virtio-scsi-pci --scsi0 godboxv2-tank:vm-9000-disk-0

sudo qm set 9000 --boot c --bootdisk scsi0

sudo qm disk resize 9000 scsi0 +50G

sudo qm set 9000 --ide2 godboxv2-tank:cloudinit

sudo qm set 9000 --serial0 socket --vga serial0

sudo qm set 9000 --agent enabled=1

sudo qm template 9000
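Since the storage name, template ID, and VLAN tag appear in several of those commands, it can help to pull them into variables. The following is a minimal sketch of the same steps with a dry-run switch; `TEMPLATE_ID`, `STORAGE`, `VLAN_TAG`, `IMAGE`, `DRY_RUN`, `run`, and `build_template` are illustrative names, and the defaults are simply the values I used above.

```shell
TEMPLATE_ID="${TEMPLATE_ID:-9000}"
STORAGE="${STORAGE:-godboxv2-tank}"
VLAN_TAG="${VLAN_TAG:-72}"
IMAGE="${IMAGE:-jammy-server-cloudimg-amd64.img}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi
}

build_template() {
    run sudo qm create "$TEMPLATE_ID" --name ubuntu-2204-cloudinit-template \
        --memory 4096 --cores 2 --net0 "virtio,bridge=vmbr0,tag=$VLAN_TAG"
    run sudo qm importdisk "$TEMPLATE_ID" "$IMAGE" "$STORAGE"
    # Assumes the imported volume is named vm-<id>-disk-0, as on my storage.
    run sudo qm set "$TEMPLATE_ID" --scsihw virtio-scsi-pci \
        --scsi0 "$STORAGE:vm-$TEMPLATE_ID-disk-0"
    run sudo qm set "$TEMPLATE_ID" --boot c --bootdisk scsi0
    run sudo qm disk resize "$TEMPLATE_ID" scsi0 +50G
    run sudo qm set "$TEMPLATE_ID" --ide2 "$STORAGE:cloudinit"
    run sudo qm set "$TEMPLATE_ID" --serial0 socket --vga serial0
    run sudo qm set "$TEMPLATE_ID" --agent enabled=1
    run sudo qm template "$TEMPLATE_ID"
}
```

Calling `build_template` prints the nine commands for review. Once they look right for your storage, set `DRY_RUN=0` and call it again to execute them.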

Clone the template into a new VM.

sudo qm clone 9000 2001 --name k8s-01

sudo qm set 2001 --sshkey godboxv3.pub

sudo qm set 2001 --memory 4096

sudo qm set 2001 --ciuser tiernano

sudo qm set 2001 --ipconfig0 ip=dhcp

Replace tiernano and godboxv3.pub with your own username and SSH public key file. Change the VM ID, name, and memory as necessary.
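For a cluster you will want several of these, so the clone step is a natural candidate for a loop. Here is a small sketch that repeats the commands above for IDs 2001 onward; `clone_nodes`, `run`, and `DRY_RUN` are hypothetical names, and the ID and naming scheme is just my assumption of a sensible pattern.

```shell
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi
}

# Clone template 9000 into N VMs: 2001/k8s-01, 2002/k8s-02, ...
clone_nodes() {
    count="$1"
    i=1
    while [ "$i" -le "$count" ]; do
        vmid=$((2000 + i))
        name=$(printf 'k8s-%02d' "$i")
        run sudo qm clone 9000 "$vmid" --name "$name"
        run sudo qm set "$vmid" --sshkey godboxv3.pub --memory 4096 \
            --ciuser tiernano --ipconfig0 ip=dhcp
        i=$((i + 1))
    done
}

# Usage:
#   clone_nodes 3               # preview the commands for three nodes
#   DRY_RUN=0; clone_nodes 3    # actually create them
```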

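As mentioned earlier, my clones were still ending up with the same IP address, most likely because the DHCP client identifier is derived from the machine ID and every clone had the same one. To resolve this, log into each VM and run the following commands as root: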

echo -n > /etc/machine-id

rm /var/lib/dbus/machine-id

ln -s /etc/machine-id /var/lib/dbus/machine-id

Reboot the VM. It will generate a new machine ID on boot, and the problem should be resolved.
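If you have to do this on more than a couple of VMs, the three commands can be wrapped in a function that is safe to run twice. This is only a sketch: `reset_machine_id` is a made-up name, and the optional directory prefix exists purely so you can try it against a scratch directory before touching a real system.

```shell
# Empty /etc/machine-id and point the D-Bus copy at it.
# With no argument it acts on the live system (run as root);
# with a directory argument it acts on <dir>/etc and <dir>/var/lib/dbus.
reset_machine_id() {
    root="${1:-}"
    : > "$root/etc/machine-id"
    rm -f "$root/var/lib/dbus/machine-id"
    ln -s /etc/machine-id "$root/var/lib/dbus/machine-id"
}

# Usage on a VM, as root:
#   reset_machine_id && reboot
```

Using `rm -f` means the function does not fail if the D-Bus file is already missing.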