Looks like there is a new release for ESXi 8.0. Seems to be mostly a DPU patch. Anyone running ESXi with DPUs might need to look into this…

Monthly Archives March 2023

Day 60 of #100daysofhomelab

Day 60 of #100daysofhomelab and I have been sick for most of the last 2 weeks, so that’s why I haven’t been posting much… Today is going to be links only too…

- Updates on the 3CX Security Alert for Electron Windows App – and the next few links are all about it… I use 3CX at home… Luckily I don’t use the desktop app (Phone app and their PWA app, but not the desktop one). If you do, start reading!

- 3CX VoIP Software Compromise & Supply Chain Threats (huntress.com)

- // 2023-03-29 // SITUATIONAL AWARENESS // CrowdStrike Tracking Active Intrusion Campaign Targeting 3CX Customers // : crowdstrike (reddit.com)

- Ironing out (the macOS details) of a Smooth Operator Objective-See’s Blog

- Tailscale Funnel now available in beta · Tailscale

- ASRock Rack GENOAD8UD-2T/X550 Genoa Motherboard Review (servethehome.com)

- From IP packets to HTTP: the many faces of our Oxy framework (cloudflare.com)

Day 59 of #100daysofhomelab – Proxmox Updates, LTT Hacked, New Framework Laptops

Day 59 of #100daysofhomelab and Proxmox released 7.4 of their Virtual Environment. I have not upgraded any of my machines to it, just yet, but that’s the plan for the weekend. Other than that, some links:

- The Ultimate Cheap Fanless 2.5GbE Switch Mega Round-Up (servethehome.com)

- Proxmox VE 7.4 Released with Dark Mode Support (servethehome.com)

- Framework’s Laptop 16 is a modular, upgradeable gaming laptop | Engadget

- Framework brings updated Intel and AMD chips to its modular laptop | Engadget

- The Linus Tech Tips YouTube channel is the latest to be taken over by hackers – Neowin

Day 58 of #100daysofhomelab

Day 58 of #100daysofhomelab and today is mostly a retrospective of what I did over the last few days, with some links thrown in for good measure…

Given I am going to keep GodBoxV3 running Windows Server 2022 for the foreseeable future, I installed Veeam Availability Suite (through their NFR program) and got it to back up my Hyper-V VMs, along with my ESXi VMs, to both local and Backblaze B2 storage. So far, so good.

Also, Ubiquiti released Unifi OS 3.0 for the UDM Pro, which I upgraded this morning. Links for that are below. Some nice bits in here, like:

- Added Wireguard VPN Server support.

- Added VPN Client Routing.

- Added Ad-blocking feature.

- Added support for OpenVPN tunnel in Traffic Routes.

- Allow adding multiple VPN Clients.

The 2.5 release of the OS had the VPN Client option, but ALL traffic went over the VPN, whether you wanted it to or not. This release lets you say that traffic from a given host or network, or even traffic to a given IP or range, goes over the VPN link. The Ad Block feature is nice too, but I have not tried it yet (still using PiHole for the moment), and the Wireguard VPN option is going to be VERY handy. More testing coming soon…
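To picture what that per-host and per-destination routing means, here is a rough sketch of the decision as a rule match. It is only an illustration of the idea, not how UniFi OS does it internally; the `TrafficRoute` structure, the `pick_link` helper and all addresses are made up for the example.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass
class TrafficRoute:
    """Hypothetical rule: match on source and/or destination, then pick a link."""
    source: Optional[str] = None       # host or network, e.g. "192.168.10.0/24"
    destination: Optional[str] = None  # IP or range, e.g. "203.0.113.0/24"
    via: str = "vpn"


def _matches(addr: str, target: Optional[str]) -> bool:
    # A rule field left empty matches everything.
    return target is None or ip_address(addr) in ip_network(target, strict=False)


def pick_link(src: str, dst: str, routes: list[TrafficRoute]) -> str:
    """Return the link for a packet; anything unmatched uses the normal WAN."""
    for route in routes:
        if _matches(src, route.source) and _matches(dst, route.destination):
            return route.via
    return "wan"


routes = [
    TrafficRoute(source="192.168.10.50"),        # one host always uses the VPN
    TrafficRoute(destination="203.0.113.0/24"),  # anything going to this range uses the VPN
]

print(pick_link("192.168.10.50", "8.8.8.8", routes))
print(pick_link("192.168.10.51", "8.8.8.8", routes))
print(pick_link("192.168.10.51", "203.0.113.7", routes))
```

With the 2.5 behaviour, every packet would have come back as "vpn" regardless of source or destination.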

Anyway, on to the links.

Day 57 of #100daysofhomelab

Day 57 of #100daysofhomelab and it’s a link dump for today:

- Using DPUs Hands-on Lab with the NVIDIA BlueField-2 DPU and VMware vSphere Demo (servethehome.com)

- grafolean/grafolean: Easy to use monitoring system (github.com)

- ONE HUNDRED GIGABIT – MikroTik CRS504-4XQ-IN – YouTube

- I Bought the Last One Apple Ever Made… – YouTube – LTT tests out the Apple XServe

Day 56 of #100daysofhomelab

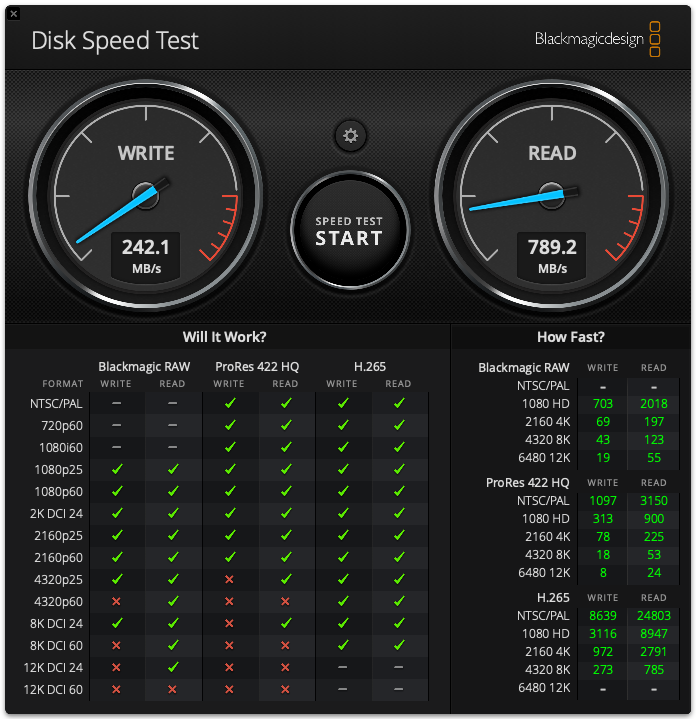

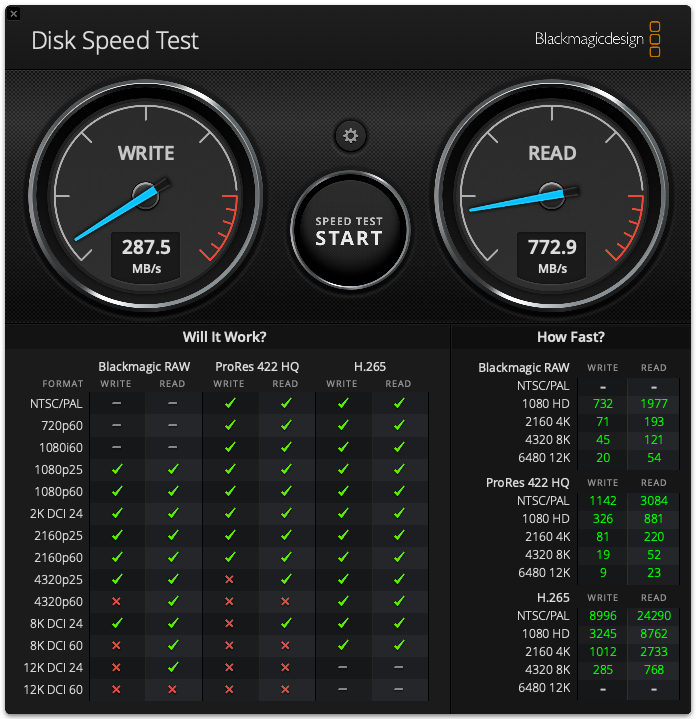

Day 56 of #100daysofhomelab and I managed to fix some stuff with my TrueNAS box. There was lots of messing when it came to permissions, but it works now. Some speeds are below. Not quite getting the speeds I was expecting, but then I have not tweaked anything yet… This is going from my MacBook Pro with a 10Gb adapter. The reads are quite good, but the writes… well, the HDDs are FASTER than the NVMe… No idea why… I did get a new card to add another 4 NVMe drives in… We’ll see what happens when that gets built.

And now, the links:

Day 55 of #100daysofhomelab

Day 55 of #100daysofhomelab and it’s going to mostly link dump today… Still working on my TrueNAS stuff, hopefully, I can get a couple of blog posts about it soon enough… Anyway, on to the links.

- Uploading Large Files with AzCopy (markheath.net)

- SQL Server 2022: Intel® QuickAssist Technology overview – Microsoft SQL Server Blog

- tailscale/cpc: a copy tool (github.com)

- Embed Funnel in your App (tailscale.dev)

- Globally distributed Elixir over Tailscale · Richard Taylor

- Silicon Valley Bank Shutdown: Implications for Startups and VCs | Fintech Friday

- pgrok/pgrok: Poor man’s ngrok – a multi-tenant HTTP reverse tunnel solution through SSH remote port forwarding (github.com)

- US regulators bail out SVB customers, who can access all their money Monday | CNN Business

Day 54 of #100daysofhomelab

Day 54 of #100daysofhomelab and it’s going to be a very quick one… My head is wrecked with TrueNAS… Swapped TrueNAS Core (FreeBSD) to TrueNAS Scale (Linux). Trying to get Resilio Sync to work on it, but getting permissions issues… It’s after 2 am here, so giving up for the moment, but hopefully, I can figure it out tomorrow… On a different note, I ordered a load of storage upgrades (Another Hyper M.2 x16 card, some new NVMe drives, and some other stuff) for GodBoxV3… More details soon…

Day 53 of #100daysofhomelab

Day 53 of #100daysofhomelab and it’s been a busy week… ish… I’ve been battling with vertigo on and off this week, so haven’t done a lot. I did, however, fix some issues with the network, set up a proper failover WAN connection using SmoothWAN and my Quad 2.5Gb Box, and have started making major changes to GodBoxV3.

Originally, GodBoxV3 had all its spinning disks (8 x 8TB drives, either shucked from WD My Books or Seagate IronWolfs) in a single RAID 5 pool in Windows Storage Spaces. The NVMe drives were a second pool (5 of them: 4 Force MP510 480GB NVMe SSDs on a Hyper M.2 x16 card and a 5th unbranded one on an x1 PCIe add-in card), and there was a third pool of 2 x 960GB IronWolf SSDs.

I deleted the RAID 5 and NVMe arrays, and now, for testing, I have spun up a TrueNAS Core VM on the 2 SSDs and passed the NVMe drives and HDDs into that VM. Windows can still “see” them, but they are marked as offline. Hard Disk Sentinel and CrystalDiskInfo can both still see their SMART status (TrueNAS can’t, weirdly…). Then, I have 7 of the 8 HDDs added to a single pool (one is failing, so I left it out; this is only for testing currently, anyway) and the 5 NVMe drives added to a second pool. The plan is to use the 4 Force MP510s (or replacement drives) as a single pool, then the other NVMe (or maybe even 2) as a cache or log device for the spinning disk pool.

So far, it doesn’t matter whether I am using the NVMe or the HDD pool: speeds from my Mac (with a 10Gb Thunderbolt adapter) are around the same… Might be a config issue, might be the odd NVMe drive slowing the rest down… but I am happy with the speeds so far… I have seen 300-400MB/s writes and 900+MB/s reads on both NVMe and HDD… Most of that is probably cached… the VM has 64GB of RAM given to it, and the test file was only 5GB (BlackMagic Disk Speed Test). More testing is required though.
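A quick back-of-the-envelope check on those numbers, as a sketch only. It assumes the speeds are in megabytes per second and ignores protocol overhead; the helper names are made up for the example.

```python
LINK_GBIT = 10     # 10Gb Thunderbolt adapter (from the post)
VM_RAM_GB = 64     # RAM given to the TrueNAS VM (from the post)
TEST_FILE_GB = 5   # BlackMagic test file size (from the post)


def link_ceiling_mb_s(gbit: float) -> float:
    """Theoretical maximum in MB/s, ignoring overhead (8 bits per byte)."""
    return gbit * 1000 / 8


def fits_in_ram(file_gb: float, ram_gb: float) -> bool:
    """True if the whole test file could sit in memory."""
    return file_gb < ram_gb


ceiling = link_ceiling_mb_s(LINK_GBIT)
print(f"10Gb link ceiling: {ceiling:.0f} MB/s")
print(f"900 MB/s reads are {900 / ceiling:.0%} of that")
print(f"Test file fits in RAM: {fits_in_ram(TEST_FILE_GB, VM_RAM_GB)}")
```

Reads that close to the link ceiling, with a test file far smaller than the VM's RAM, fit the guess that the reads are mostly coming from cache rather than from the disks.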

ZFS over multiple DVD/BD-R images

A couple of days back, I started thinking about archiving and backup software. I kind of have backups “sorted”, with my MacBook Pro using BackBlaze to back up to the cloud, Time Machine backing it up to my Synology, my VMs on Proxmox being backed up to Proxmox Backup Server off-site, my Synology and QNAPs being backed up to B2 and Hetzner, and some other bits and bobs… But for the archiving stuff, I am not really set up… So, I went looking for archiving software. Couldn’t find anything, so I asked on r/DataHoarder. Still no options at the time of posting, but someone did reply with the idea of using DVDs (or Blu-rays) for ZFS...

Ok, that’s just crazy, but in a kind of a good way… kind of like the floppy RAID stuff I have seen… It does help with the storage of data, plus allows for potential loss of data… but it needs some automation to get it fully perfect…

Assuming you are using this for archiving, you could automate building 5 ISOs, just shy of 100GB each, once a month, create a ZFS RAID-Z pool across them at level 2 or 3 (depending on how paranoid you are) and then write your data to it. RAID-Z1 lets you lose 1 disk, giving you around 400GB of usable space. RAID-Z2 brings that up to 2 losable disks and 300GB, and RAID-Z3 is 3 disks and 200GB. I think RAID-Z2 would be your best bet, especially if you are using something like M-DISC and are storing them safely.
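The capacity numbers are just "disks that are not parity, times disk size". A minimal sketch of that arithmetic, assuming 5 images rounded to 100GB each and ignoring ZFS metadata overhead (the `usable_gb` helper is made up for the example):

```python
def usable_gb(disks: int, disk_gb: int, parity: int) -> int:
    """Rough usable space for one RAID-Z group: data disks times disk size."""
    if not 1 <= parity <= 3:
        raise ValueError("RAID-Z parity must be 1, 2 or 3")
    if disks <= parity:
        raise ValueError("need more disks than parity")
    return (disks - parity) * disk_gb


for parity in (1, 2, 3):
    space = usable_gb(5, 100, parity)
    print(f"RAID-Z{parity}: lose up to {parity} disk(s), about {space}GB usable")
```

Real usable space will come in a bit lower, since the images are slightly under 100GB and ZFS keeps some space for itself.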

Once finished, unmount and send an email saying you need to write the ISOs to disk. Label each disk with a unique serial number (this is where the archiving software would be handy) plus the set details and number (so, March 2023 Disk 1/5).
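Until some proper archiving software turns up, the labels themselves are easy enough to generate. A small sketch, where the `ARC-00001` serial format and the `disk_labels` helper are purely my own invention for illustration:

```python
def disk_labels(set_name: str, disks: int, first_serial: int) -> list[str]:
    """Build one label per disk: a running serial plus set name and position."""
    return [
        f"ARC-{first_serial + i:05d} | {set_name} Disk {i + 1}/{disks}"
        for i in range(disks)
    ]


for label in disk_labels("March 2023", disks=5, first_serial=1):
    print(label)
```

The next month's set would carry on from serial 6, so every disk ever written has a unique number regardless of which set it belongs to.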

If you need something from that backup, you stick the disks in the drives… You can do it with fewer drives than disks: with 5 disks and RAID-Z1, you need to mount a minimum of 4 of them. RAID-Z2 needs 3 and RAID-Z3 needs a minimum of 2… Ideally, you would want all 5 of them, allowing you to check all the disks (ZFS scrub) and then get your files off.
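The minimum number of disks is simply the total minus the parity level. A tiny sketch of that check (both helper names are made up for the example):

```python
def min_disks_to_read(total: int, parity: int) -> int:
    """Fewest disks that must be present for the pool to be readable."""
    return total - parity


def can_read(total: int, parity: int, mounted: int) -> bool:
    """True if enough of the set is mounted to get files off."""
    return mounted >= min_disks_to_read(total, parity)


for parity in (1, 2, 3):
    print(f"RAID-Z{parity} with 5 disks: need at least {min_disks_to_read(5, parity)}")

print(can_read(total=5, parity=2, mounted=3))
print(can_read(total=5, parity=2, mounted=2))
```

Running at the minimum leaves no redundancy, so one bad read on a remaining disk could cost you data, which is why having all 5 mounted is the safer option.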

A year of archiving would require 5 drives (say 100 quid a pop; USB makes things easier, internal is possibly cheaper) and 60 disks (I found a pack of 25 100GB M-DISCs on Amazon for around 500 EUR), costing a total of maybe 2k, with 15 disks left over…
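Here is that sum spelled out, as a rough sketch. It assumes one set of 5 disks a month and lazily treats pounds and euros as the same unit, since this is only a ballpark; the `yearly_cost` helper and its parameter names are made up for the example.

```python
import math


def yearly_cost(sets_per_year: int = 12, disks_per_set: int = 5,
                pack_size: int = 25, pack_price: int = 500,
                drives: int = 5, drive_price: int = 100) -> dict:
    """Ballpark yearly cost, buying blank disks in whole packs."""
    disks_needed = sets_per_year * disks_per_set
    packs = math.ceil(disks_needed / pack_size)
    return {
        "disks_needed": disks_needed,
        "packs": packs,
        "spare_disks": packs * pack_size - disks_needed,
        "total": packs * pack_price + drives * drive_price,
    }


print(yearly_cost())
```

The drives are a one-off purchase, so later years would only need the blank disks.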

Follow-up questions:

- Does ZFS allow a pool to be mounted read-only?

- Could you do this with rewritable Blu-ray disks? Could they be mounted directly and written to? Leave them in the drives for the month, let writes do their thing and then archive them once a month? It’s an archive, so it doesn’t need to be fast…
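On the first question: as far as I know, `zpool import` accepts `-o readonly=on`, and `-d` tells it where to look for the pool's devices or files. A sketch that only builds the command, without running it, assuming the images are visible as files in a directory; the pool name and path are hypothetical.

```python
def readonly_import_cmd(pool: str, image_dir: str) -> list[str]:
    """Build (but do not run) a read-only import for a pool made of image files."""
    return ["zpool", "import", "-o", "readonly=on", "-d", image_dir, pool]


# Hypothetical pool name and directory, for illustration only.
cmd = readonly_import_cmd("archive-2023-03", "/mnt/archive-images")
print(" ".join(cmd))
```

I have not tested this against images sitting on actual optical disks, so treat it as a starting point rather than an answer.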
